AI for archives browsing

Multimodal semantic analysis for the performing arts heritage

Performing arts archives and collections are called upon to preserve and document a multimodal and multidimensional experience. The space, the sound, the lights, the performers, the objects, the audience, the music and the words are all interconnected parts of a performance, which is often in dialogue with other performances or with artistic works in general. For this reason, we want to go beyond indexing and cataloguing and move towards a paradigm of connectivity and multimodality, where vision, speech and sound are analysed as interrelated aspects of the performing work and are made searchable and accessible. Our focus is the use and reuse of the archive from the point of view of performing arts practice and knowledge.

Our goal

Generate semantic information that users of the archive can exploit. We have two main scopes of application:

Browsing archives

Allow search based on multimodal semantic information.

3D Virtual Theatre

Allow customizable visualisation of semantic information on screen.

This applies both to archived data and in real time during performances.

Technologies

Multimodal semantic analysis refers to the process of understanding, or making meaning out of, information in textual, sound and visual modalities. AI technologies are used in this process to automate the extraction, classification and cross-referencing of information at large scale. This reduces the effort needed for manual description tasks, while making it possible to establish different criteria for looking into the archive. Training the algorithms and models on the parameters of performing arts practice means that we are creating AI that can look into the archive with the eyes of a creator, a scenographer, a dancer or a scriptwriter.

Audio analysis

Describe “what” and “when” in the semantic content of audio

Extraction of information from audio recordings, whether speech, music or other events, and indexing of those events.

- Text: named entity recognition, speaker diarization (indexing speakers and speeches), subtitles/script alignment

- Music: style, tempo and beat / metre, musical instruments, tonality, singing voice, music emotion

- Audio events: event description, audio roughness

Audio analysis © Inês Barahona e Miguel Fragata 2013, A Caminhada dos Elefantes

Language technologies

Automatically recognise speech and transcribe it into text, for greater accessibility or subtitle generation

Speech-to-text and speaker diarization, in offline and real-time cases

- Automatically transcribe speech audio to text offline

- Automatically align speech audio with an existing script

- Automatically transcribe streaming speech audio to text in real time

- Automatically identify the subtitle from the speech audio of a stream
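Script alignment, as listed above, can be pictured with a small sketch. It assumes a speech recogniser has already produced words with timestamps, and it uses generic sequence matching from the Python standard library as a stand-in; this is not the project's actual alignment method. The function name `align_transcript_to_script` and the input shapes are hypothetical.

```python
from difflib import SequenceMatcher


def align_transcript_to_script(transcript_words, script_lines):
    """Return one (start, end) span in seconds per script line, or None if unmatched.

    transcript_words: list of (word, start_seconds, end_seconds), assumed to
        come from a speech recogniser (hypothetical input format).
    script_lines: list of strings in performance order.
    """
    def normalise(word):
        # Lowercase and drop punctuation so "love," matches "love".
        return "".join(ch for ch in word.lower() if ch.isalnum())

    # Flatten the script into tokens, remembering the line each token came from.
    script_tokens, line_of_token = [], []
    for line_index, line in enumerate(script_lines):
        for word in line.split():
            token = normalise(word)
            if token:
                script_tokens.append(token)
                line_of_token.append(line_index)

    heard_tokens = [normalise(word) for word, _, _ in transcript_words]

    # Find runs of words that appear in the same order in script and transcript.
    matcher = SequenceMatcher(a=script_tokens, b=heard_tokens, autojunk=False)
    spans = [None] * len(script_lines)
    for block in matcher.get_matching_blocks():
        for offset in range(block.size):
            line_index = line_of_token[block.a + offset]
            _, start, end = transcript_words[block.b + offset]
            if spans[line_index] is None:
                spans[line_index] = (start, end)
            else:
                first, last = spans[line_index]
                spans[line_index] = (min(first, start), max(last, end))
    return spans


# Illustrative data only: the recogniser misheard "excess" as "access".
script = ["If music be the food of love, play on", "Give me excess of it"]
heard = [
    ("if", 0.0, 0.2), ("music", 0.2, 0.6), ("be", 0.6, 0.7), ("the", 0.7, 0.8),
    ("food", 0.8, 1.1), ("of", 1.1, 1.2), ("love", 1.2, 1.6), ("play", 1.8, 2.1),
    ("on", 2.1, 2.3), ("give", 3.0, 3.2), ("me", 3.2, 3.3), ("access", 3.3, 3.8),
    ("of", 3.8, 3.9), ("it", 3.9, 4.1),
]
spans = align_transcript_to_script(heard, script)
print(spans)  # [(0.0, 2.3), (3.0, 4.1)]
```

Because matching works on whole runs of words, a single misrecognised word does not prevent the surrounding line from being located in time. A line with no matching words keeps `None`, which a human annotator could then resolve.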

NLP © Ricardo Pais 1998, Noite de Reis

Video analysis

Reach a three-dimensional understanding of a two-dimensional material

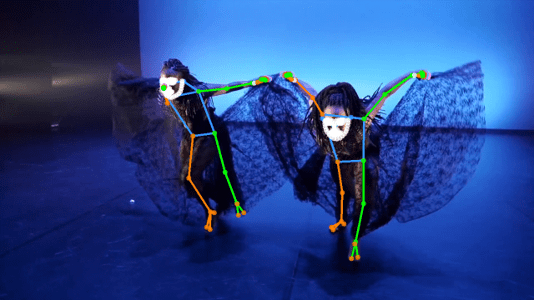

3D scene analysis and 3D pose trajectory estimation

- Object/human detection

- Human 2D/3D pose estimation

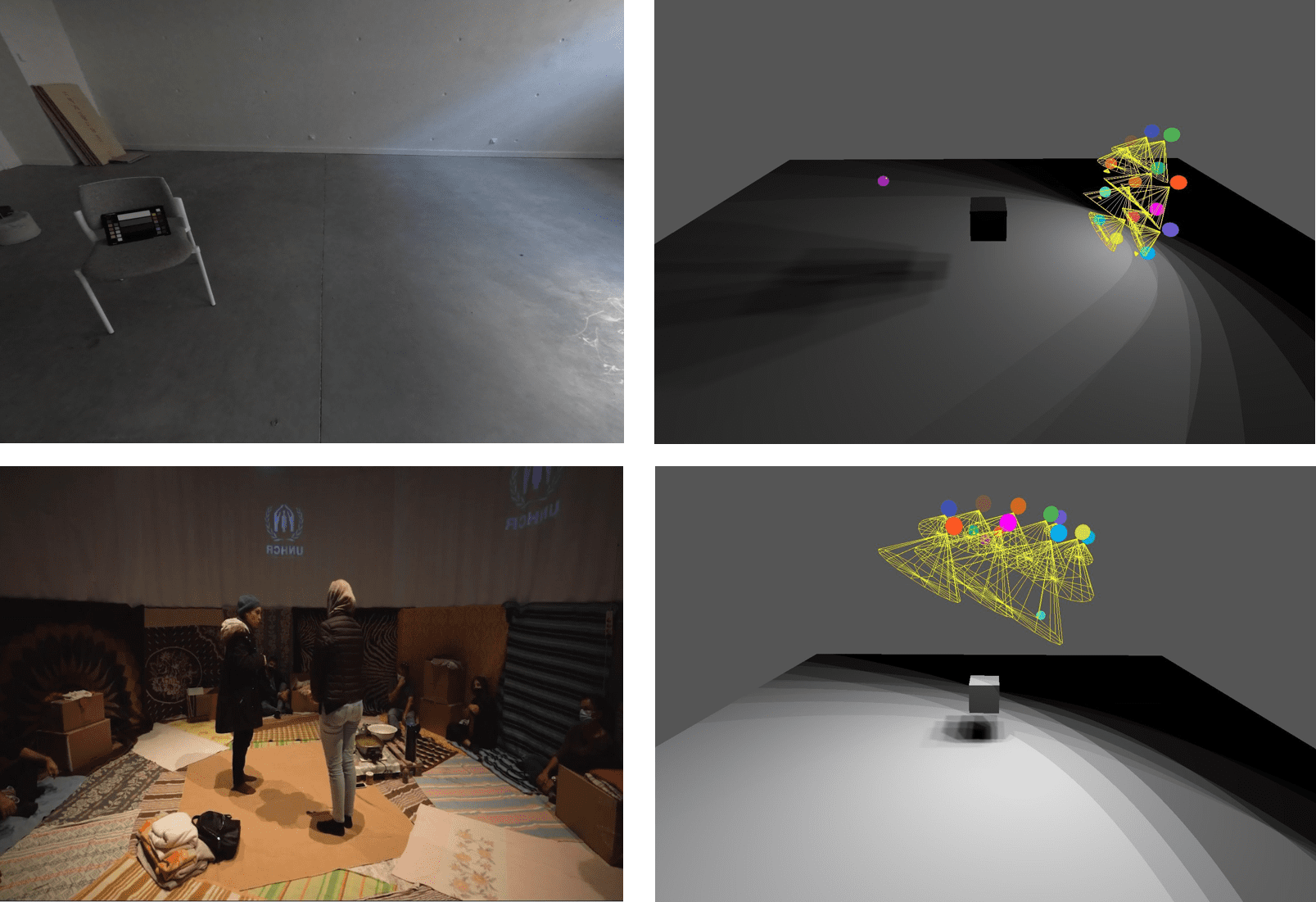

- Light color and position estimation

- Depth/3D layout of the room

- Human ID tracking

- Human motion

Light position estimation © Una Hora Menos 2021, Moria

Sentiment analysis

Analyse sentiment expression in different modalities

- Body motion analysis and synthesis, based on the Laban Movement Analysis system

- Analysis of motions/sentiments

- Synthesis of motion (temporal composition, style transfer, long sequences)

- Motion generation through text

- Emotion recognition from facial expression

- Text/Speech based human sentiment analysis

Cross-linking

Enable search across audio, video or text data

Retrieval of content based on semantic relations across modalities



In practice

These technologies are applied to performing arts collections on the basis of pretrained models, which are retrained and fine-tuned for the specific setting of dance and theatre, following the criteria established by the art institutions that are members of the project and by the professionals who participated in the user studies.

Semantic data crosslinking

Content management system

What the project proposes and develops is a platform that brings all these technologies together on top of a content management infrastructure. First, the platform generates AI annotations for the videos of the performances, drawing on the data from the audio, video and text analysis. Then, human experts can enrich and correct them through a video annotation tool. Finally, the browsing interface offers the data of the work’s record and visualises them in live streaming. A user of the archive can not only watch a performance enriched with the semantic information, but can also search for objects, sounds, musical instruments or motion qualities, or make queries for similar scenes.
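To make the idea of searching across modalities concrete, here is a minimal sketch of time-coded annotations and a query for moments where two labels overlap. It assumes annotations are stored as labelled time spans; the `Annotation` fields and the `find_co_occurrences` function are hypothetical illustrations, not the platform's actual data model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """One time-coded label on a performance recording (hypothetical schema)."""
    modality: str        # "audio", "video" or "text"
    label: str           # e.g. "cello", "chair"
    start: float         # seconds from the start of the recording
    end: float
    source: str = "ai"   # "ai" for automatic output, "human" for expert edits


def find_co_occurrences(annotations, label_a, label_b):
    """Return sorted (start, end) spans during which both labels are present."""
    spans_a = [a for a in annotations if a.label == label_a]
    spans_b = [b for b in annotations if b.label == label_b]
    hits = []
    for a in spans_a:
        for b in spans_b:
            # Two spans overlap when the later start precedes the earlier end.
            start, end = max(a.start, b.start), min(a.end, b.end)
            if start < end:
                hits.append((start, end))
    return sorted(hits)


# Illustrative data only: a cello heard on the audio track, a chair seen on stage.
annotations = [
    Annotation("audio", "cello", 10.0, 45.0),
    Annotation("video", "chair", 30.0, 90.0, source="human"),
    Annotation("audio", "cello", 120.0, 150.0),
    Annotation("video", "chair", 140.0, 145.0),
    Annotation("text", "storm", 50.0, 60.0),
]
moments = find_co_occurrences(annotations, "cello", "chair")
print(moments)  # [(30.0, 45.0), (140.0, 145.0)]
```

Keeping a `source` field on each annotation is one way to record whether a label came from automatic analysis or from an expert's correction, so both can coexist in the same index.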

The technologies and methodologies developed take into account that the challenges archives face today are not only technological, but also include issues such as IP management, the sustainability of infrastructures and the need for resources and expert skills. For this reason, we aim to make the platform comprehensible, transparent and sustainable.

The vision driving these efforts is to allow broad access to the performing arts heritage and make the archive a place of knowledge and creativity. Museums, theatres, festivals or private collections host a heritage that allows us to understand how European societies have thought about their identity in different times and geographies, and to continue elaborating on it.

Who participates?

- Athena Research Center

- FITEI – Festival Internacional de Teatro de Expressão Ibérica

- Forum Dança – Associação Cultural

- Medidata.net – Sistemas de Informação Para Autarquias