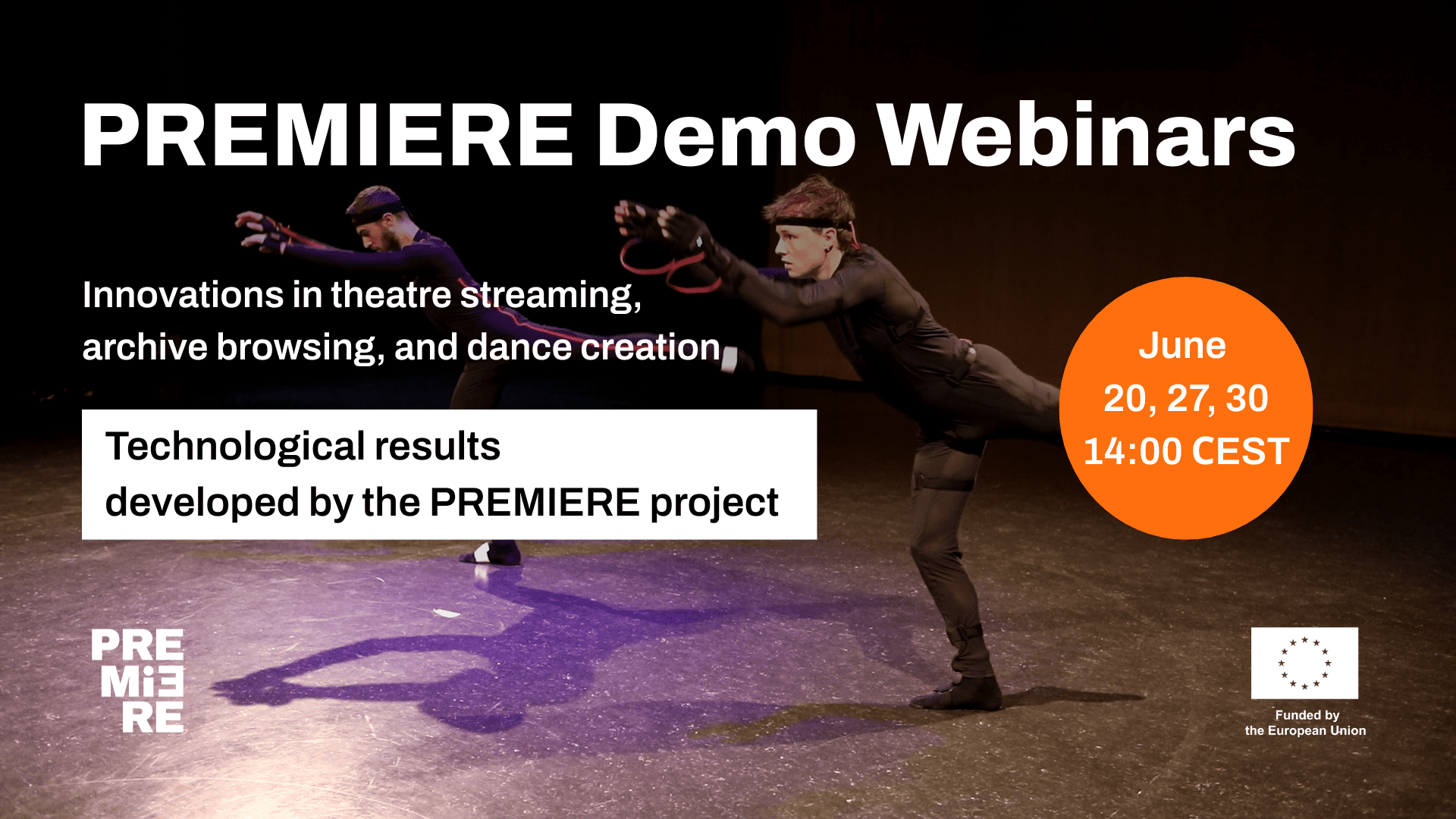

A new series of webinars is being launched to present the outcomes of the Premiere project.

Over the past three years, Premiere has brought together researchers and artists to explore how AI and XR technologies can support the full creative lifecycle of the performing arts, from streaming and archiving to analysing and creating. The project has resulted in a range of innovative tools to browse archives, stream performances, transmit knowledge, and co-create dance works.

At the centre of this work is the 3D Virtual Theatre, a shared digital space that brings all these tools together. It enables XR streaming of performances and offers an interactive visualisation tool for exploring past performances, including motion, sound, and speech data, in a three-dimensional environment. It also showcases the AI Toolbox for dance creation.

What to expect:

Join us for a series of live demonstrations and conversations about how these tools were developed and how other researchers, cultural organisations, and creators can use them.

The three sessions will be held online and are free of charge. Each session will run for approximately one hour and will include a 30-minute Q&A.

Register and join us for the online discussions in June 2025

Webinar I

VR streaming and knowledge transmission in the 3D Virtual Theatre

Friday, 20 June 2025

14:00 CEST

Hosted by CYENS Centre of Excellence

We have explored how venues and companies can adopt motion capture and VR streaming technologies to enable virtual presence and interaction. In this webinar, we will present the two use cases we worked on. The first shows how performances can be streamed in a virtual environment, allowing audiences to join remotely using VR headsets, web browsers, desktops, or mobile phones and to experience the event without being physically present. The second shows how artists can meet in a shared virtual space to rehearse or exchange knowledge, supporting remote collaboration and co-creation. This work offers a roadmap and a solution for institutions looking to explore digital stages.

Webinar II

Multimodal analysis and XR visualisation tool for performing arts archives

Friday, 27 June 2025

14:00 CEST

Hosted by Athena Research Centre, Laboratoire Hubert Curien (Université de Saint-Étienne), and Medidata.Net

This webinar presents a solution that delivers the insights of semantic analysis to archive users through a VR visualisation tool. By combining several state-of-the-art technologies for analysing performance videos, the tool streams recorded performances and provides access to a range of data derived from audiovisual analysis. Users can experience performances in VR and explore layers of information such as pose analysis, motion, trajectories, lighting, music, and text-related data within a three-dimensional environment. The environment also includes a 3D reconstruction of the performers' bodies, projected onto a virtual stage based on the original video. Overall, the tool shows how semantic technologies can enhance the experience of browsing performing arts archives.

Webinar III

AI Toolbox for dance creation

Monday, 30 June 2025

14:00 CEST

Hosted by Instituto Stocos

Exploring generative approaches in the arts, this webinar presents a set of AI-powered tools designed to support creative processes in contemporary dance and to offer new possibilities for artistic experimentation. These include tools for movement analysis that focus on idiosyncratic movement qualities, as well as tools that use motion capture systems to translate movement into other modalities, enabling performers to make music with their bodies, control lighting conditions, or turn their movement into non-anthropomorphic avatars. The tools are open source and were developed by artists, for artists.