Incubatio is a transdisciplinary dance production created by Instituto Stocos, which was presented at Teatro Museo Universidad de Navarra on October 31, 2024. This production demonstrates the creative possibilities of the Dance Creation Environment, a set of modular software tools that facilitate and support the creative use of generative machine learning and extended reality within the performing arts. These tools were created within the European project PREMIERE.

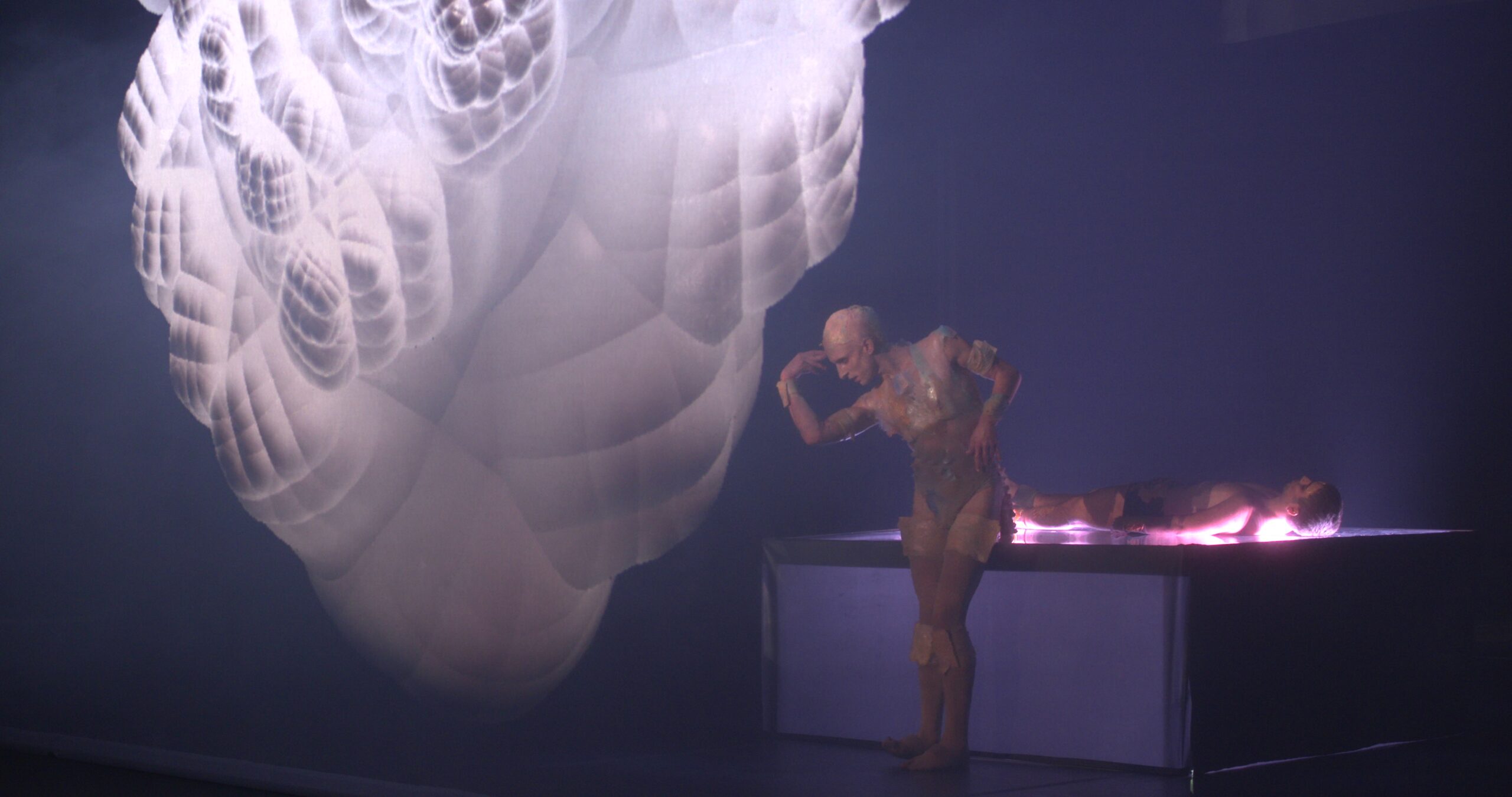

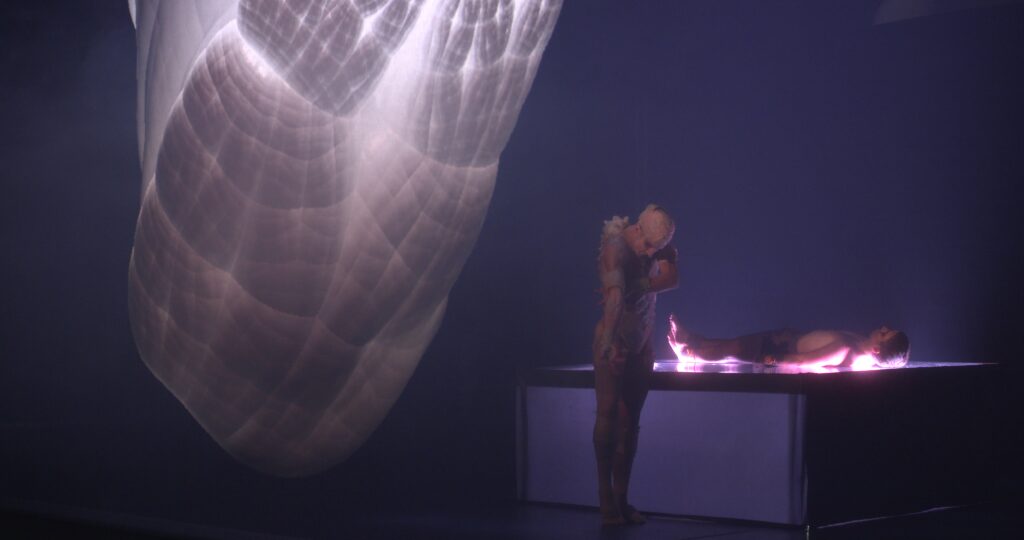

Incubatio recreates in a scenic format ancestral rites of Western culture that induce sleep episodes for healing purposes. These rites are probably among the first documented psychotherapeutic techniques. The work combines dance, electroacoustic music, artificial intelligence, extended reality and motion capture techniques to evoke archetypical imagery associated with the trance states induced by the rites.

Incubatio makes extensive use of most of the functionalities provided by the Dance Creation Environment: movement analysis and synthesis, sound synthesis adapted for interactive movement sonification, image synthesis adapted for both movement visualization and the creation of immersive environments, and interactive control of robotic lights.

The production of Incubatio went through an iterative process during which the tools that form part of the Dance Creation Environment were adapted to the specific needs and goals of the piece. These goals were to convey through choreography, image, sound and light the experience of an Incubatio rite.

An Incubatio rite involves two people: the Iatromantis, a shaman or therapist, and the mista, the person who enters the trance state with the Iatromantis's assistance. The piece is created for two dancers, each wearing a motion tracking device. Two types of tracking systems were used. As a high-end system, XSens Awinda was employed to track the full body of a single dancer. As a low-end system, custom-made wearable acceleration sensors were employed to measure the motions of a dancer's extremities. The use of these two systems highlights the adaptability of the Dance Creation Environment, which integrates both with very sophisticated and precise motion capture systems and with simple and affordable ones.

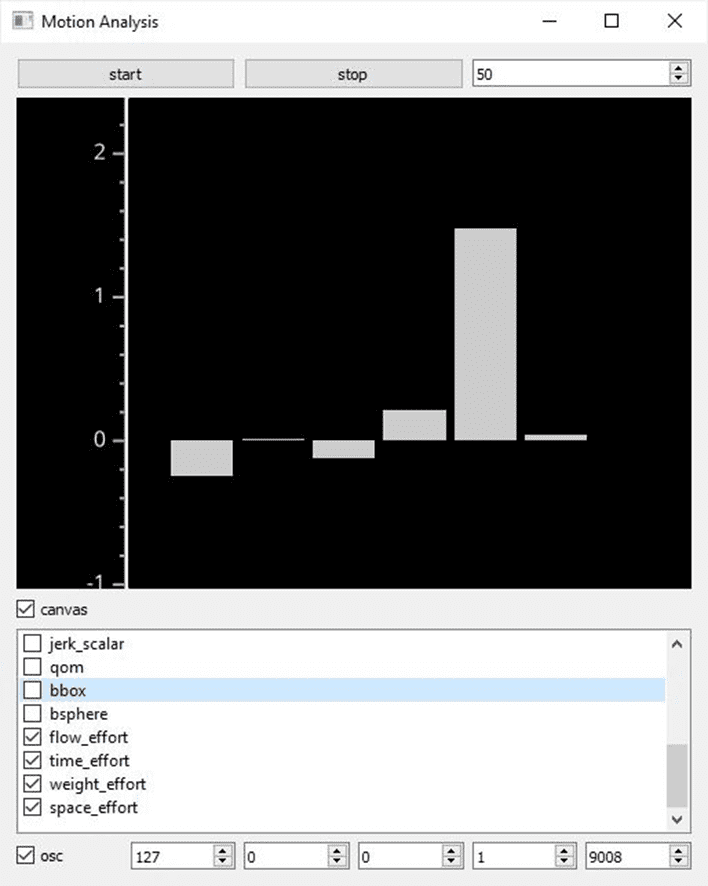

The movement analysis tool forms the basis for establishing a strong connection between expressive aspects of dance, music, image, and light. From raw motion data, the tool derives medium- and high-level motion descriptors that convey information about qualitative aspects of movement. Among others, the tool analyses higher-order derivatives (acceleration, jerk) of linear and rotational motion and the four Laban Effort components (Time Effort, Flow Effort, Space Effort, Weight Effort).
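To make the notion of higher-order derivatives concrete, the following is a minimal sketch of how acceleration and jerk magnitudes can be estimated from sampled joint positions with finite differences. It is not the Dance Creation Environment's implementation; the function names, the forward-difference scheme and the example trajectory are assumptions chosen for illustration.

```python
from math import sqrt

def finite_difference(samples, dt):
    """Differentiate a sequence of 3D vectors with forward differences."""
    return [
        tuple((b[i] - a[i]) / dt for i in range(3))
        for a, b in zip(samples, samples[1:])
    ]

def magnitude(vec):
    """Euclidean length of a 3D vector."""
    return sqrt(sum(c * c for c in vec))

def motion_descriptors(positions, dt):
    """Return speed, acceleration and jerk magnitudes (hypothetical helper).

    Each differentiation shortens the sequence by one frame.
    """
    velocity = finite_difference(positions, dt)
    acceleration = finite_difference(velocity, dt)
    jerk = finite_difference(acceleration, dt)
    return {
        "speed": [magnitude(v) for v in velocity],
        "acceleration": [magnitude(a) for a in acceleration],
        "jerk": [magnitude(j) for j in jerk],
    }

# Hypothetical trajectory: a joint moving along x as x(t) = t^2,
# i.e. with constant acceleration 2 and zero jerk.
dt = 0.1
positions = [((k * dt) ** 2, 0.0, 0.0) for k in range(6)]
descriptors = motion_descriptors(positions, dt)
```

In practice, sensor data is noisy and each differentiation amplifies that noise, so a real analysis pipeline would typically smooth the signal before computing jerk.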

The movement sonification tools translate movement into music and play a prominent role in the piece, enhancing the experience of the dance techniques developed for it. One of these movement approaches is cataleptic movement, inspired by Dr. Milton Erickson's hypnotic techniques, which consists of the suspension of voluntary movement characteristic of trance states. A major challenge was adapting the sonification tools to this type of movement, which is experienced by the dancer playing the role of the mista, who is manipulated by the Iatromantis. The cataleptic sonification uses synthesized human voice and wind instruments to translate movement qualities into music. Some of the Iatromantis's choreographies are also sonified using non-standard synthesis techniques such as dynamic stochastic sound synthesis.
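As a rough illustration of dynamic stochastic sound synthesis, the sketch below builds a waveform from a small set of breakpoints whose amplitudes and durations each take a bounded random walk on every cycle, with linear interpolation between breakpoints. This is a generic textbook-style version of the technique, not the sonification code used in the piece; all names, parameter values and the choice of uniform random steps are assumptions.

```python
import random

def reflect(value, low, high):
    """Fold a value back into [low, high], acting as elastic barriers."""
    while value < low or value > high:
        if value < low:
            value = 2 * low - value
        if value > high:
            value = 2 * high - value
    return value

def dynamic_stochastic_synthesis(num_cycles, num_breakpoints=8,
                                 amp_step=0.1, dur_step=2.0,
                                 min_dur=2.0, max_dur=40.0, seed=0):
    """Generate samples from a waveform whose breakpoints drift randomly.

    Hypothetical parameters: amp_step and dur_step bound the random walk
    steps; durations are measured in samples.
    """
    rng = random.Random(seed)
    amps = [0.0] * num_breakpoints
    durs = [20.0] * num_breakpoints
    samples = []
    prev_amp = 0.0
    for _ in range(num_cycles):
        for i in range(num_breakpoints):
            # Random walk of this breakpoint's amplitude and duration.
            amps[i] = reflect(amps[i] + rng.uniform(-amp_step, amp_step), -1.0, 1.0)
            durs[i] = reflect(durs[i] + rng.uniform(-dur_step, dur_step), min_dur, max_dur)
            # Linear interpolation from the previous breakpoint to this one.
            n = max(1, round(durs[i]))
            for k in range(1, n + 1):
                samples.append(prev_amp + (amps[i] - prev_amp) * k / n)
            prev_amp = amps[i]
    return samples

signal = dynamic_stochastic_synthesis(num_cycles=50)
```

In an interactive setting, movement descriptors could plausibly be mapped to parameters such as `amp_step` or `dur_step`, so that more energetic movement yields a more turbulent timbre; that mapping is a design assumption, not something the source specifies.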

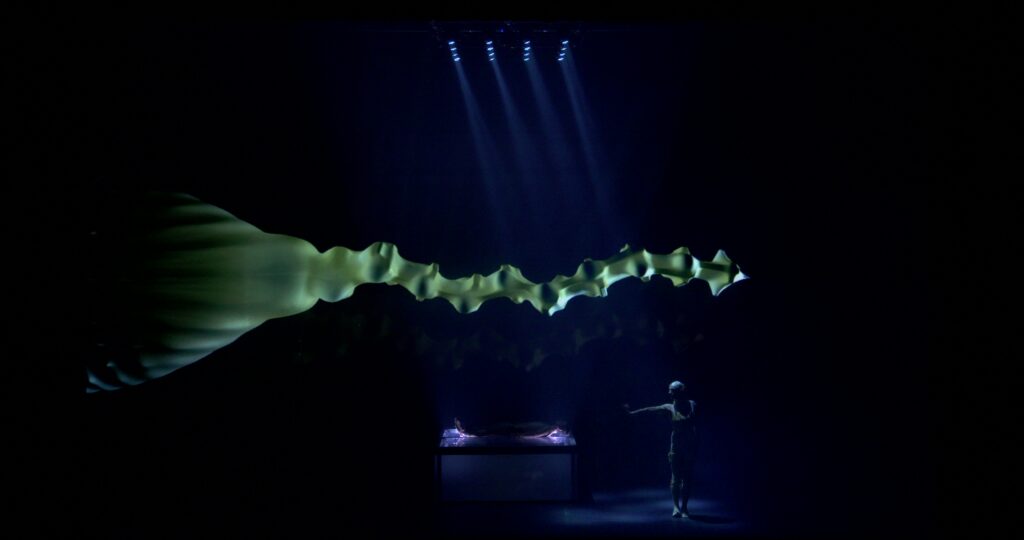

The movement synthesis tool of the Dance Creation Environment that has been used extensively in Incubatio is a physics-based simulation that generates expressive behaviors for articulated bodies with arbitrary (including non-anthropomorphic) body architectures. With this tool, snake-like entities have been designed whose movements exhibit several idiosyncratic movement qualities of choreographer Muriel Romero.

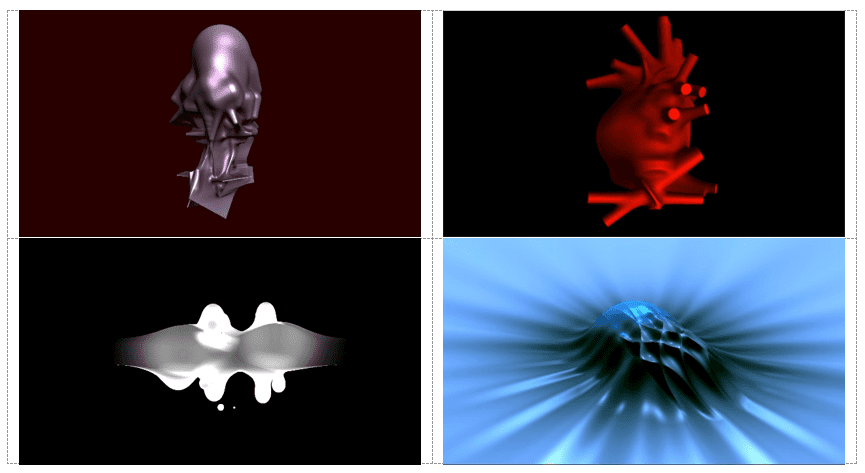

The ray marching visualization tool of the Dance Creation Environment played a central role in the development of this production. This tool generates reinterpretations of archetypal images that have recurred throughout history in the dreams and visions of individuals across many epochs. These images are brought forth by an unconscious and collective part of the human being and occasionally become accessible through dreams or altered mental states. The visualisation tool has been adapted to generate archetypical images of Mercurio, Magnetic Water, Mandalas, and Scintilla, as well as images reminiscent of organic structures such as the Brain or Heart. These images are shown in the piece as video projections on the scenography, where they appear both as real-time visualisations or avatars of one of the dancers and as immersive environments.

From left to right and top to bottom: Mercurio, Heart, Scintilla, Magnetic Water.
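For readers unfamiliar with ray marching, the sketch below shows the core idea in its common sphere-tracing form: a ray advances through a signed distance field by the distance to the nearest surface until it hits something or leaves the scene. It is a toy CPU version that renders one sphere as text, not the visualisation tool itself; the scene, camera setup and all names are assumptions.

```python
from math import sqrt

def sphere_sdf(point, center=(0.0, 0.0, 3.0), radius=1.0):
    """Signed distance from a point to a sphere (negative inside)."""
    dx, dy, dz = (point[i] - center[i] for i in range(3))
    return sqrt(dx * dx + dy * dy + dz * dz) - radius

def ray_march(origin, direction, sdf, max_steps=64, max_dist=20.0, eps=1e-4):
    """Return the distance travelled to the first hit, or None on a miss.

    direction is expected to be a unit vector.
    """
    travelled = 0.0
    for _ in range(max_steps):
        point = tuple(origin[i] + direction[i] * travelled for i in range(3))
        dist = sdf(point)
        if dist < eps:
            return travelled
        # Stepping by the distance to the nearest surface cannot overshoot it.
        travelled += dist
        if travelled > max_dist:
            break
    return None

def render(width=32, height=16):
    """Render the scene as rows of text: '#' for a hit, '.' for a miss."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel centre to [-1, 1] on an image plane at z = 1.
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            length = sqrt(u * u + v * v + 1)
            direction = (u / length, v / length, 1 / length)
            hit = ray_march((0.0, 0.0, 0.0), direction, sphere_sdf)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows

image = render()
```

Because the scene is defined entirely by a distance function, replacing `sphere_sdf` with a function that blends or deforms shapes over time is enough to animate it, which is one reason the technique suits generative imagery driven by live data.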

The light design of the piece consists of 24 robotic lights arranged on a grid above a bed on which one of the dancers lies. This bed is also illuminated. All lights are controlled during the performance either by algorithms such as swarm simulations or stochastic functions, or directly through the movement of the dancers. In addition to these moving lights, two laser projectors are used, both creating patterns controlled by generative simulations or by the movement of one of the dancers.

Incubatio is a form of artistic dissemination that demonstrates the potential of the Dance Creation Environment to support a production rich in choreographic and dramaturgical complexity. It makes extensive use of tools related to several disciplines such as movement, music, visual arts and light.

CREDITS:

Conception and idea: Pablo Palacio and Muriel Romero

Choreography: Muriel Romero in collaboration with the dancers

Music: Pablo Palacio

Choreographic Assistance: Arnau Pérez

Dancers: Gaizca Morales and Arnau Pérez

Interactive visual simulation: Daniel Bisig

Lighting and scenic space: Maxi Gilbert and Pablo Palacio

Costumes: Raquel Buj

Digital visualization: Daniel Bisig and Pedro Ribot

Motion capture: Pedro Ribot

Interactive sonification: Pablo Palacio

Software and interactive technology: Daniel Bisig, Pablo Palacio, Fernando Fernández and Pedro Ribot

Light and laser programming: Pablo Palacio, Daniel Bisig and Pedro Ribot

Production: Instituto Stocos

Supporters: EU-Horizon Programme, Teatro Museo de la Universidad de Navarra, Coliseu do Porto, Alhóndiga-Azkuna Zentroa, L’animal a l’Esquena.