by Maximos Kaliakatsos

The Athena Research Center (ARC) in Athens, Greece, is working with archives available in the PREMIERE project, applying audio content analysis to dance and theatre recordings. The aim is to enrich the way we understand performances and improve accessibility, using AI, language technologies, and advanced visualizations.

First results that incorporate all modalities of interest have been obtained.

ARC has already developed the first version of the algorithms that will form the backbone of the speech, text, music and audio applications across all PREMIERE use cases. These methods mainly involve pretrained deep neural networks that are being fine-tuned for all target languages and for the application domain (theatre and dance). ARC is also working on effective ways to visualize information coming from audio, video and 3D capture on spectators' screens.

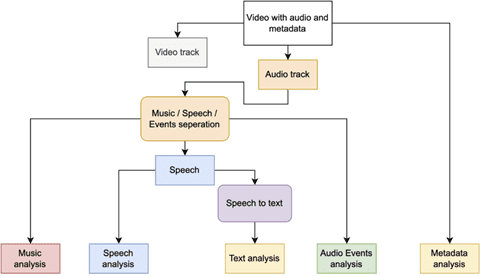

Overall workflow of audio, music and text analysis framework. © Athena Research Center

The specific challenges tackled by these algorithms are the following:

Music / speech / sound events separation

It is crucial that the algorithms for each modality are applied only to relevant content. For example, music analysis should be performed on segments that clearly contain music, and speech analysis on segments that clearly contain speech. Whether the audio comes from recorded files or from a live stream, the goal is to separate it cleanly so that each subsequent task receives the right input. Along with music/speech separation, sound events (e.g., a door closing or a thunder strike) will also be detected and annotated.
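As an illustration of how such a separation step can feed later tasks, the sketch below assumes some classifier (hypothetical, not ARC's model) has already assigned one label per fixed-length audio frame. It merges consecutive frames with the same label into time-stamped segments, drops very short segments as likely misclassifications, and groups the result by label so that each analysis module receives only its relevant audio. The frame hop and minimum duration are illustrative assumptions.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Segment:
    label: str    # e.g. "music", "speech", "door_closing"
    start: float  # seconds
    end: float    # seconds


def frames_to_segments(labels, hop_s=0.5, min_dur_s=1.0):
    """Merge consecutive frames sharing a label into segments.

    `labels` holds one predicted class per frame (assumed classifier output).
    Segments shorter than `min_dur_s` are discarded, leaving a gap.
    """
    segments = []
    idx = 0
    for label, group in groupby(labels):
        n = len(list(group))
        start, end = idx * hop_s, (idx + n) * hop_s
        if end - start >= min_dur_s:
            segments.append(Segment(label, start, end))
        idx += n
    return segments


def route(segments):
    """Group segments by label so each analysis module gets only its content."""
    routed = {}
    for seg in segments:
        routed.setdefault(seg.label, []).append(seg)
    return routed


if __name__ == "__main__":
    frames = ["speech"] * 4 + ["music"] * 6 + ["speech"] + ["music"] * 2
    for label, segs in route(frames_to_segments(frames)).items():
        print(label, [(s.start, s.end) for s in segs])
```

Dropping short segments is the simplest choice; a production system might instead absorb them into a neighbouring segment or smooth the frame labels before merging.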

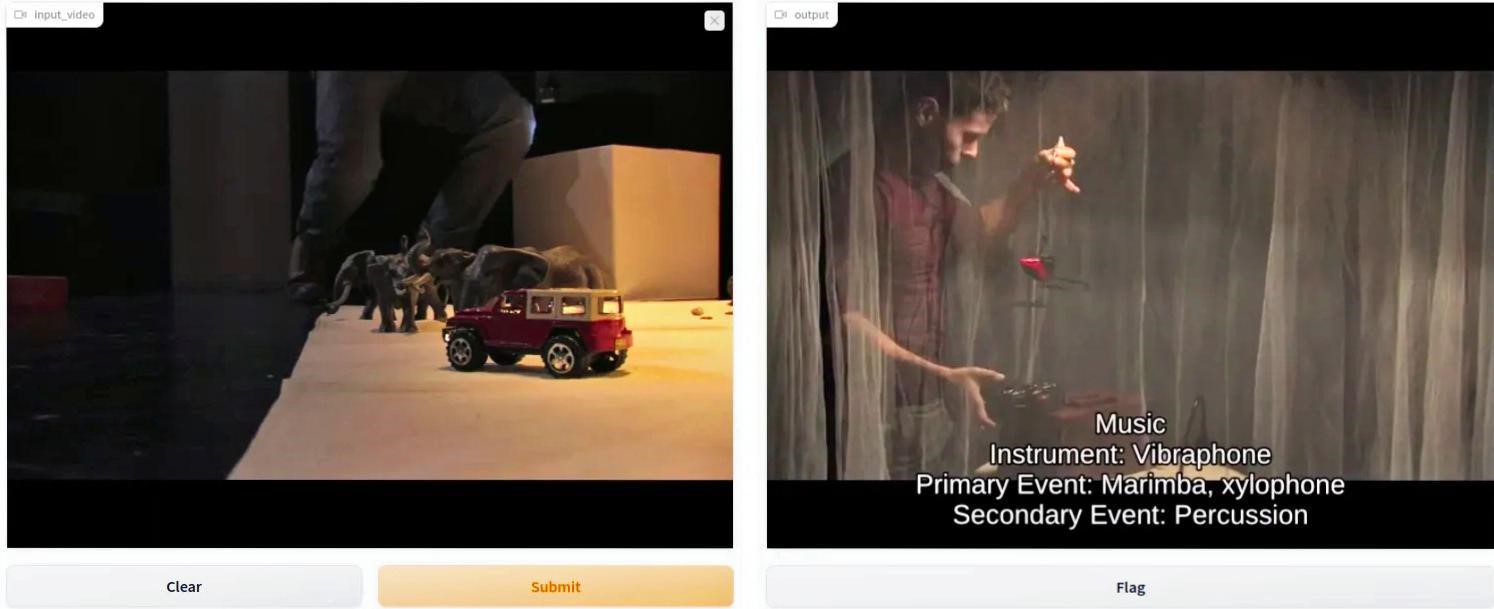

Screenshot of input video (left) and audio-analyzed video (right) © Athena Research Center

Music analysis

Music identified in theatre or dance performances is automatically annotated by algorithms according to style, beat, tempo, instruments involved and emotion, among other attributes. Synchronized visualizations of this information will be available to viewers in real time, during video playback or live performances, with a particular focus on improving accessibility for hard-of-hearing spectators.
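To make the link between beat and tempo annotation concrete, here is a minimal sketch that derives a tempo value in beats per minute from beat timestamps. It assumes the timestamps (in seconds, sorted) come from some beat tracker; the article does not specify how ARC estimates tempo, so the median inter-beat-interval approach below is only one simple option.

```python
from statistics import median


def tempo_from_beats(beat_times):
    """Estimate tempo in BPM from sorted beat timestamps (seconds).

    Uses the median inter-beat interval, which tolerates a few missed or
    spurious beats better than the mean. Returns None for fewer than 2 beats.
    """
    if len(beat_times) < 2:
        return None
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    ibi = median(intervals)
    if ibi <= 0:
        raise ValueError("beat times must be strictly increasing")
    return 60.0 / ibi


if __name__ == "__main__":
    # One beat (at 1.5 s) is missing, yet the median interval is still 0.5 s.
    print(tempo_from_beats([0.0, 0.5, 1.0, 2.0, 2.5, 3.0]))
```

Computing this over a sliding window of recent beats would give a tempo value that can update during playback, which is the kind of signal a synchronized visualization could display.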

Speech and text analysis

The speech of the performers will be transcribed to text. Emotion and sentiment analysis will be performed on both the text and the speech, enabling the retrieval of sentiment-specific segments in the archives while giving hard-of-hearing spectators access to subtle sentiment information that may be conveyed through prosody. The main challenges here are supporting multiple languages and adapting to the dance and theatre domain, since pretrained models are available mainly for English and for conversational prosody.
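One way to picture sentiment-based retrieval is a searchable index of transcribed segments, each carrying a sentiment score from a text model and one from a speech (prosody) model. The sketch below is hypothetical: the score range of -1 to +1, the equal weighting of the two modalities and the field names are assumptions for illustration, not the PREMIERE design.

```python
from dataclasses import dataclass


@dataclass
class TranscriptSegment:
    recording_id: str
    start: float             # seconds
    end: float               # seconds
    text: str
    text_sentiment: float    # assumed range -1 (negative) .. +1 (positive)
    speech_sentiment: float  # assumed range -1 .. +1, from prosody


def fused_sentiment(seg, w_text=0.5):
    """Weighted average of text and speech sentiment (weight is an assumption)."""
    return w_text * seg.text_sentiment + (1 - w_text) * seg.speech_sentiment


def find_segments(archive, min_score=None, max_score=None, w_text=0.5):
    """Return segments whose fused score lies within the given bounds,
    ordered from most negative to most positive."""
    hits = []
    for seg in archive:
        score = fused_sentiment(seg, w_text)
        if min_score is not None and score < min_score:
            continue
        if max_score is not None and score > max_score:
            continue
        hits.append(seg)
    return sorted(hits, key=lambda s: fused_sentiment(s, w_text))


if __name__ == "__main__":
    archive = [
        TranscriptSegment("rec1", 10.0, 14.0, "A", -0.8, -0.6),
        TranscriptSegment("rec1", 30.0, 33.0, "B", 0.2, -0.9),
        TranscriptSegment("rec2", 5.0, 9.0, "C", 0.5, 0.7),
    ]
    # Segment B reads as mildly positive text but sounds negative in delivery.
    print([s.text for s in find_segments(archive, max_score=-0.3)])
```

Keeping the two scores separate, rather than storing only the fused value, allows cases where the words and the delivery disagree to be surfaced, which is exactly the prosodic information a text transcript alone would miss.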

Metadata analysis

Each artistic performance comes with a set of metadata that may be crucial for spectators or researchers. In addition, the audio and text analysis methods described above provide information about entities within the performances that might not be mentioned in the metadata (e.g., the musical instruments playing in a song within a theatrical play). All this information is made available to all user groups, along with active links to external resources with relevant information.
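The enrichment step can be sketched as merging entities detected by the analysis into a performance's metadata record, skipping those the curated metadata already lists and attaching an external link to each new one. The record schema, the `link_for` resolver and the Wikipedia-style URL pattern in the usage example are all hypothetical; the article does not specify which external resources are used.

```python
def enrich_metadata(metadata, detected_entities, link_for):
    """Return a copy of `metadata` with analysis-derived entities added.

    metadata: dict with an optional "entities" list of names (hypothetical schema).
    detected_entities: iterable of (name, kind) pairs, e.g. ("violin", "instrument").
    link_for: callable mapping (name, kind) to an external URL or None
              (hypothetical resolver).
    """
    known = {name.lower() for name in metadata.get("entities", [])}
    enriched = dict(metadata)  # shallow copy; the input dict is not modified
    extra = []
    for name, kind in detected_entities:
        if name.lower() in known:
            continue  # already in curated metadata or added earlier
        known.add(name.lower())
        extra.append({
            "name": name,
            "kind": kind,
            "url": link_for(name, kind),
            "source": "audio/text analysis",
        })
    enriched["detected_entities"] = extra
    return enriched


if __name__ == "__main__":
    meta = {"title": "Example play", "entities": ["Violin"]}
    detected = [("violin", "instrument"), ("bouzouki", "instrument")]
    # Assumed link pattern, for illustration only.
    wiki = lambda name, kind: f"https://en.wikipedia.org/wiki/{name.capitalize()}"
    print(enrich_metadata(meta, detected, wiki)["detected_entities"])
```

Marking each added entry with its source keeps curated metadata distinguishable from automatically extracted information, which matters for researchers who need to know how reliable a given field is.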

Towards visualizing information

Having this amount of information available, not only from audio but also from video and 3D capture, gives all user groups the opportunity to view the artistic performance from multiple perspectives. The challenge, however, is to present this information to the user in an effective, non-overwhelming way. The methods being developed to tackle this challenge leverage cutting-edge UI principles and Virtual Reality visualization techniques.