In the context of the Premiere project, Instituto Stocos is developing an AI toolbox to support the creative processes of artists working in the performing arts, such as dancers, musicians, creative coders, light designers, and visual artists. The toolbox comprises algorithms and machine-learning models that make it possible to interact with and generate synthetic media in novel ways. Examples of interactive applications include the generation of synthetic motions for virtual dancers, the sonification of bodily expressions, the translation of movements into synthetic images, and the control of stage lights.

So far, we have developed early prototypes of some of these tools and are currently evaluating them in a series of workshops. These workshops take place at dance institutions in Spain such as Dantzagunea (Errenteria) and the Conservatorio Superior de Danza María de Ávila (Madrid).

During the workshops, the participants could experiment with two generative machine-learning models. Both models have been trained on motion capture recordings of movement qualities by choreographer Muriel Romero. The models are able to generate synthetic motions in real time for a single dancer. These synthetic motions are novel while sharing some characteristics of the originally recorded motions.

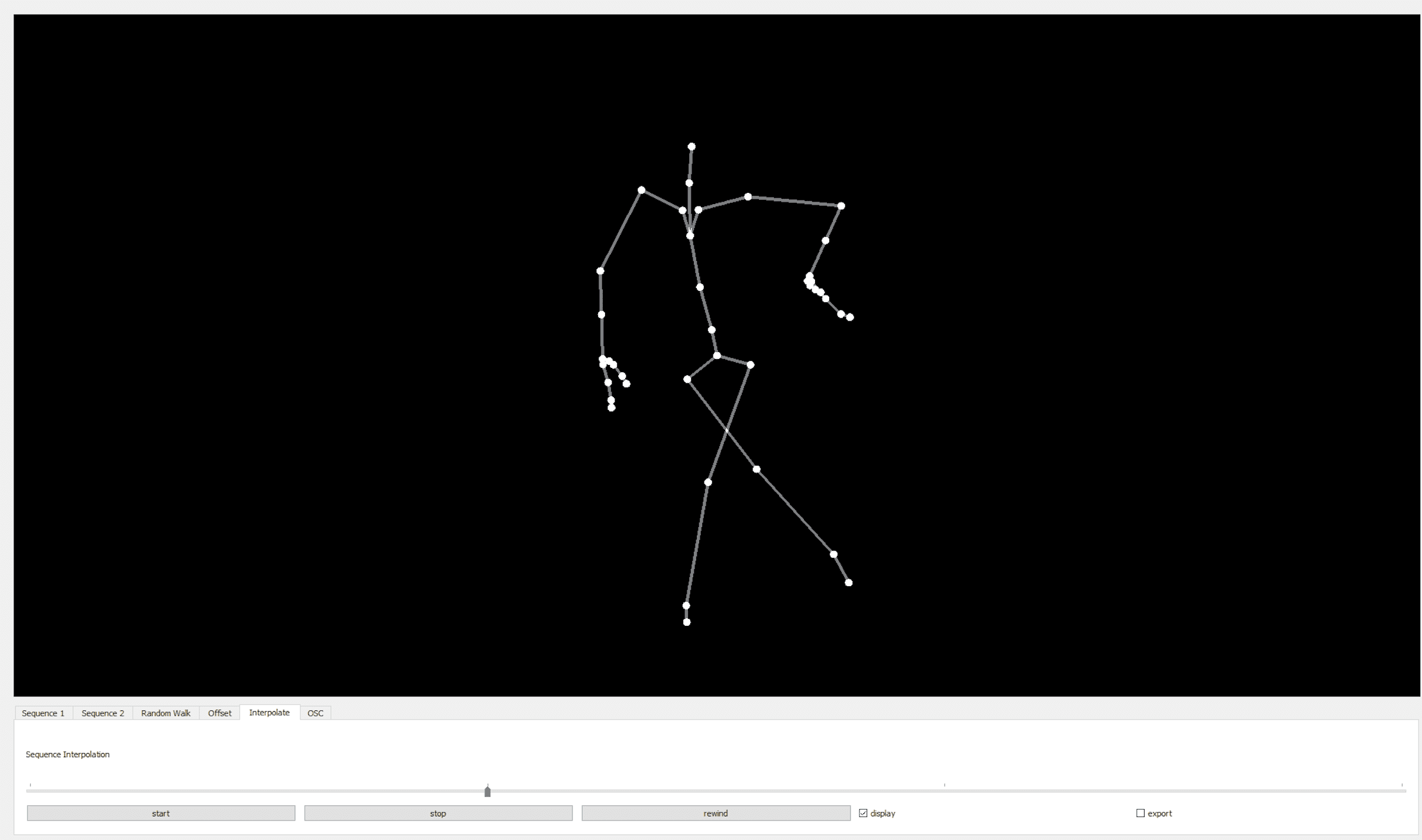

The first model is an autoencoder and can be used to create variations of existing motion sequences through transformation and interpolation of motion encodings.
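To make the idea of interpolating motion encodings concrete, here is a minimal sketch. It assumes that an encoding is a plain vector of floats and that the blend is linear; neither detail is stated for the actual toolbox, and the function names are hypothetical. In a full pipeline, a trained encoder would produce `z_a` and `z_b` from two motion excerpts, and a trained decoder would turn each blended encoding back into poses.

```python
from typing import List, Sequence


def lerp_encodings(z_a: Sequence[float], z_b: Sequence[float], t: float) -> List[float]:
    """Linearly blend two encodings; t=0 returns z_a, t=1 returns z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("encodings must have the same dimension")
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def interpolation_path(
    z_a: Sequence[float], z_b: Sequence[float], steps: int
) -> List[List[float]]:
    """Return `steps` encodings evenly spaced from z_a to z_b, both included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp_encodings(z_a, z_b, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    # Toy 2-dimensional encodings standing in for real encoder output.
    for z in interpolation_path([0.0, 0.0], [2.0, 4.0], steps=5):
        print(z)
```

Values of `t` outside the range 0 to 1 extrapolate beyond the two source encodings, which is one simple way to think about "transformation" of an encoding in this simplified setting.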

Preview of Dance Autoencoder Application

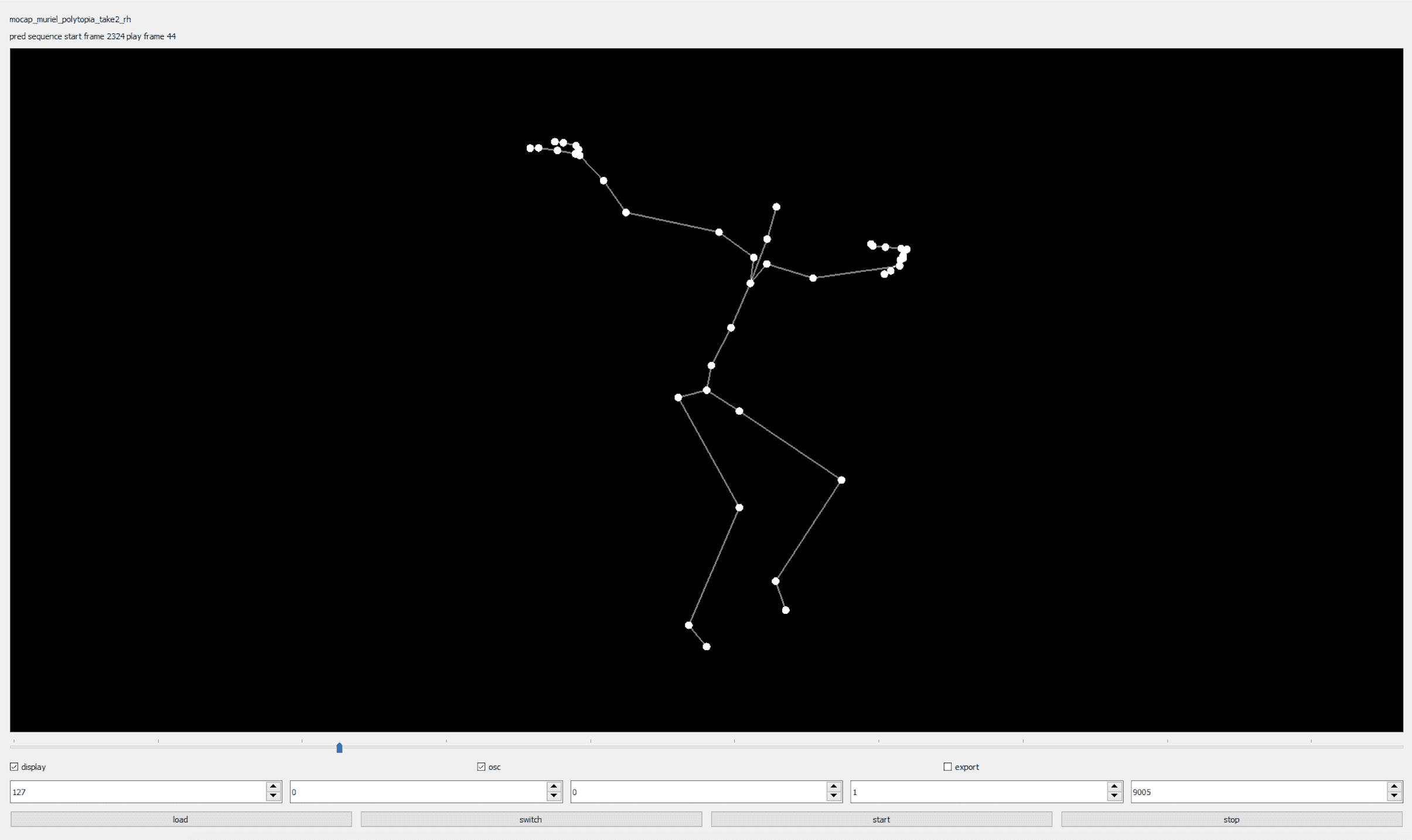

The second model is an autoregressive system and can be used to create continuations of existing motion sequences. Both models are packaged as user-friendly applications that can be integrated into creative workflows that deal with motion capture data.
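The autoregressive principle behind sequence continuation can be sketched as a loop in which each newly predicted pose is appended to the sequence and becomes part of the input for the next prediction. The sketch below is an assumption-laden illustration, not the project's implementation: `continue_sequence`, `window`, and the stand-in predictor `extrapolate` are hypothetical names, and a trained model would take the place of `extrapolate`.

```python
from typing import Callable, List, Sequence

Pose = List[float]  # one pose as a flat list of joint values (assumed layout)


def continue_sequence(
    seed: Sequence[Pose],
    predict_next: Callable[[Sequence[Pose]], Pose],
    num_new: int,
    window: int,
) -> List[Pose]:
    """Extend `seed` by `num_new` poses, feeding each prediction back as input."""
    if not seed:
        raise ValueError("seed must contain at least one pose")
    sequence = [list(pose) for pose in seed]
    for _ in range(num_new):
        context = sequence[-window:]  # most recent poses only
        sequence.append(predict_next(context))
    return sequence


def extrapolate(context: Sequence[Pose]) -> Pose:
    """Stand-in predictor: continue the last pose-to-pose change linearly."""
    if len(context) < 2:
        return list(context[-1])
    prev, last = context[-2], context[-1]
    return [2.0 * l - p for p, l in zip(prev, last)]


if __name__ == "__main__":
    seed = [[0.0, 0.0], [1.0, 0.5]]
    for pose in continue_sequence(seed, extrapolate, num_new=3, window=2):
        print(pose)
```

Because every predicted pose is fed back into the context, small prediction errors can accumulate over long continuations; this is a general property of autoregressive generation rather than something specific to this sketch.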

Dance Sequence Continuation Application

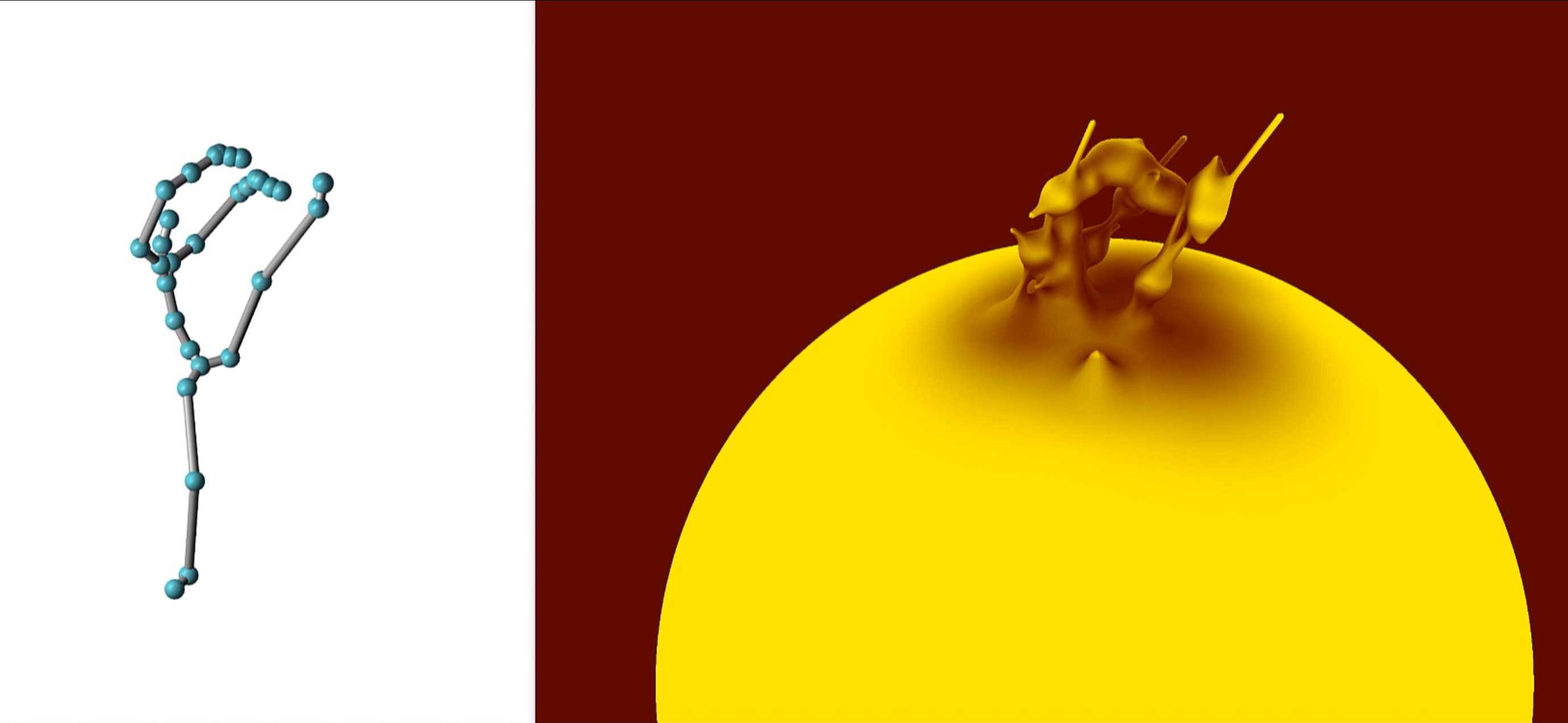

The workshop participants were also able to experiment with non-anthropomorphic visualisations of motion capture data obtained from the publicly available Instituto Stocos dataset (https://zenodo.org/record/7034917). These visualisations are created using the “Ray Marching” rendering method. With this method, the body joints and edges in the motion capture data are represented as signed distance functions. These functions are combined to generate smooth surfaces that vary in appearance between a faithful reconstruction of the original motion capture skeleton and highly amorphous shapes.
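The following sketch illustrates how signed distance functions for joints and edges might be combined and ray marched. It rests on several assumptions that are not confirmed for the actual prototype: joints are modelled as spheres, edges as capsules, the blend uses a common polynomial smooth minimum, and the renderer uses sphere tracing. All function and parameter names are hypothetical. The blend width `k` plays the role of the control described above: small values stay close to the skeleton, larger values melt the parts into blob-like shapes.

```python
import math
from typing import Callable, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sphere_sdf(p: Vec3, center: Vec3, radius: float) -> float:
    """Signed distance from p to a sphere (assumed joint shape)."""
    d = _sub(p, center)
    return math.sqrt(_dot(d, d)) - radius


def capsule_sdf(p: Vec3, a: Vec3, b: Vec3, radius: float) -> float:
    """Signed distance from p to a capsule around segment a-b (assumed edge shape)."""
    pa, ba = _sub(p, a), _sub(b, a)
    h = max(0.0, min(1.0, _dot(pa, ba) / _dot(ba, ba)))  # closest point on segment
    d = (pa[0] - ba[0] * h, pa[1] - ba[1] * h, pa[2] - ba[2] * h)
    return math.sqrt(_dot(d, d)) - radius


def smooth_min(d1: float, d2: float, k: float) -> float:
    """Polynomial smooth minimum; k <= 0 falls back to a hard minimum."""
    if k <= 0.0:
        return min(d1, d2)
    h = max(k - abs(d1 - d2), 0.0) / k
    return min(d1, d2) - h * h * k * 0.25


def skeleton_sdf(p: Vec3, joints: Sequence[Vec3], edges: Sequence[Tuple[int, int]],
                 joint_radius: float, edge_radius: float, k: float) -> float:
    """Blend all joint and edge distances into one scene distance."""
    dist = math.inf
    for j in joints:
        dist = smooth_min(dist, sphere_sdf(p, j, joint_radius), k)
    for i0, i1 in edges:
        dist = smooth_min(dist, capsule_sdf(p, joints[i0], joints[i1], edge_radius), k)
    return dist


def march(origin: Vec3, direction: Vec3, sdf: Callable[[Vec3], float],
          max_steps: int = 128, max_dist: float = 100.0, eps: float = 1e-4) -> Optional[float]:
    """Sphere tracing: step along a unit-length ray by the scene distance until a hit."""
    t = 0.0
    for _ in range(max_steps):
        p = (origin[0] + direction[0] * t,
             origin[1] + direction[1] * t,
             origin[2] + direction[2] * t)
        d = sdf(p)
        if d < eps:
            return t  # distance along the ray to the surface
        t += d
        if t > max_dist:
            break
    return None  # ray missed the scene


if __name__ == "__main__":
    joints = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # toy two-joint skeleton
    edges = [(0, 1)]
    scene = lambda p: skeleton_sdf(p, joints, edges, 0.15, 0.05, k=0.3)
    print(march((0.0, 0.5, -3.0), (0.0, 0.0, 1.0), scene))
```

In a real renderer this logic would typically run per pixel in a GPU shader; the Python version here only shows the structure of the computation.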

Non-anthropomorphic visualisations of motion capture data prototype

Furthermore, the workshop participants could experiment with several interactive sonification methods that translate body movements into synthetic music. Two groups of sonification methods were employed for this purpose: those that use realistic sound synthesis techniques and those that operate with non-standard techniques.

Interactive sonification experimentation with Hip Hop dancer Maialen Mendes at the Dantzagunea workshop

Finally, the participants were invited to provide feedback through several questionnaires, thereby contributing their points of view to the future development of the Premiere project.