AI tools for dance creation

Converging physical and artificial forms of embodied creativity

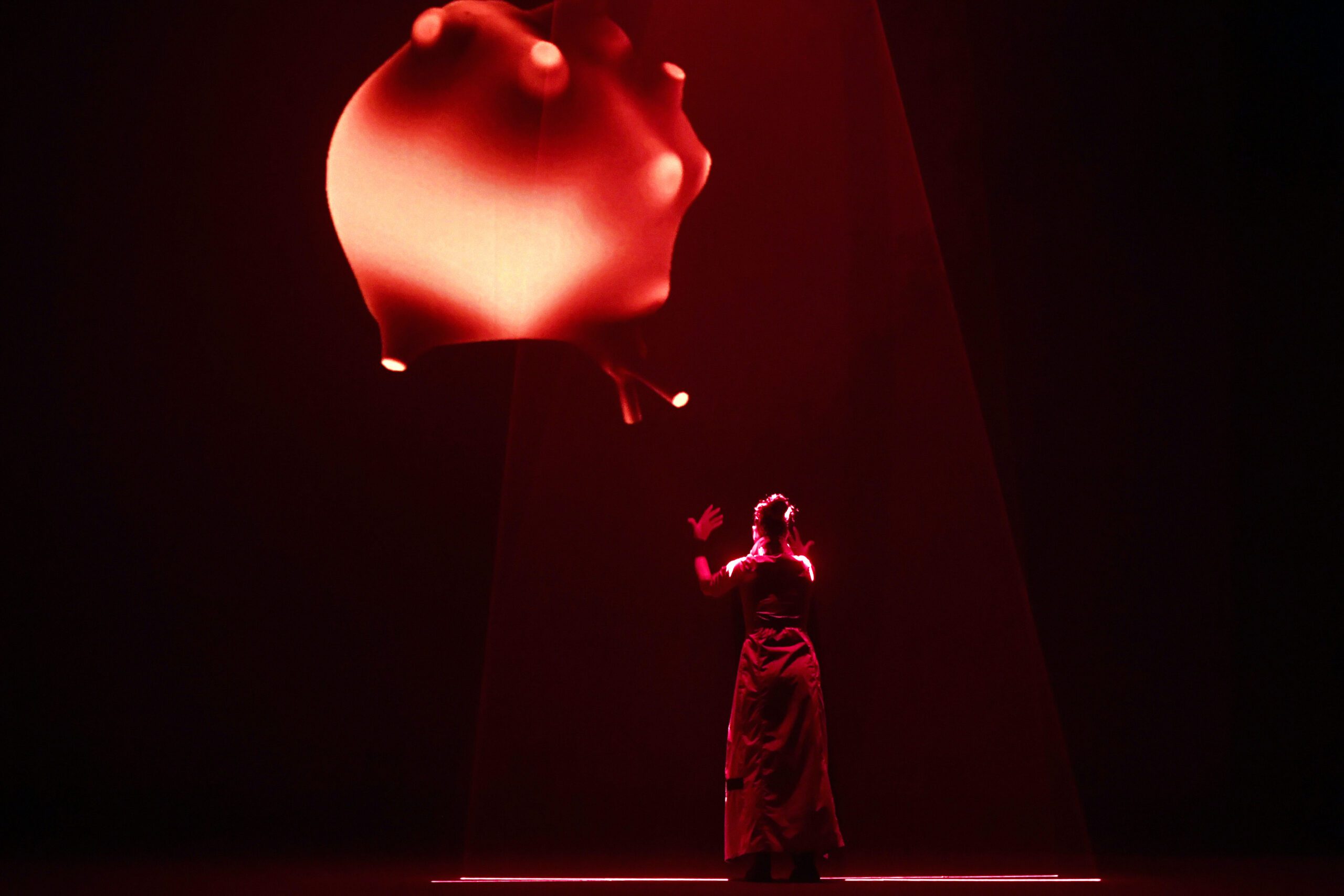

Dance and choreography are embracing technology in many forms. Artists use it to interact on stage, enrich embodied knowledge, find inspiration, experiment with computer-aided choreography, or generate content interactively. Exploring the potential of technology for dance is thus a quest for creative applications, a reflection on the relationship between dance and technology, and an endeavour to amplify movement through other sensory modalities. We research ways to transform dance movement data into sound and image through collaboration between the body and the algorithm, envisaging new opportunities for the analysis, creation and transmission of dance.

Our goal

Analyse body movement and create generative sonifications and visualisations.

Scope of application:

- Motion capture analysis
  - Obtain and analyse motion data
- Movement-based synthesis
  - Generate synthetic movement
  - Generate synthetic sound
  - Generate synthetic images

Technologies

The term generative art here refers to artistic methods that delegate the creation of an artwork to a partially or fully automated process. The AI-Toolbox that we are developing is a set of tools that transform body movement into sound and images. Using both machine learning (ML)-based and explicit algorithms, the tools combine programmed instructions with the capture of nuanced and idiosyncratic aspects of movement. The generated sound and images draw on natural elements and patterns, qualitative and quantitative movement properties, and non-anthropomorphic avatars.

Motion capture analysis

Tools for obtaining and analysing motion capture data

- Motion capture: works with the XSens system or with affordable pose estimation from video

- Motion capture player: tool for reading and playing motion capture recordings

- Motion analysis: a set of explicit algorithms that derive higher-level quantitative or qualitative movement features from raw kinematic measurements

- Motion clustering: group motion excerpts according to their movement features

Motion analysis © Instituto Stocos 2023
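As an illustration of how higher-level features can be derived from raw kinematic measurements, here is a minimal sketch that estimates speed, acceleration and jerk for a single joint using finite differences. It is not the toolbox's implementation: the function names, the choice of features and the sample data are assumptions made for this example.

```python
import math

def finite_difference(samples, dt):
    """Approximate the time derivative of a sequence of 3D vectors."""
    return [
        tuple((b[i] - a[i]) / dt for i in range(3))
        for a, b in zip(samples, samples[1:])
    ]

def mean_magnitude(vectors):
    """Average Euclidean length of a list of 3D vectors."""
    return sum(math.sqrt(sum(c * c for c in v)) for v in vectors) / len(vectors)

def movement_features(positions, fps):
    """Return mean speed, acceleration and jerk magnitude for one joint.

    positions: list of (x, y, z) tuples sampled at a fixed frame rate.
    Requires at least four frames so that jerk can be estimated.
    """
    dt = 1.0 / fps
    velocity = finite_difference(positions, dt)
    acceleration = finite_difference(velocity, dt)
    jerk = finite_difference(acceleration, dt)
    return {
        "speed": mean_magnitude(velocity),
        "acceleration": mean_magnitude(acceleration),
        "jerk": mean_magnitude(jerk),
    }

# Hypothetical recording: a joint moving at a constant 1 m/s along x, at 10 fps.
positions = [(0.1 * t, 0.0, 0.0) for t in range(10)]
features = movement_features(positions, fps=10)
```

Feature vectors like this one, computed per excerpt, could then serve as input for grouping excerpts by similarity, as in the motion clustering tool.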

Movement synthesis

Tools for generating synthetic movements

- Movement Autoencoder: transforms existing movements into novel movements by interactively navigating the model’s latent space

- Movement Continuation: predicts future poses from a sequence of previous poses, using a recurrent neural network

Movement sonifications © Instituto Stocos 2023

Sound synthesis

Several different sound synthesis techniques adapted for interactive movement sonification

- Non-standard sound synthesis: dynamic stochastic synthesis models in which the amplitude and time values that make up a waveform are defined by probability functions.

- Physical Modelling Synthesis: Simulate bowed string sounds, a membrane and the air flow in a tube.

- Additive/Subtractive Synthesis: Create very realistic vocal sounds in multiple tessituras, percussion sounds and string sounds.

- Audio Autoencoder: Transform existing waveforms into novel waveforms by blending their respective encodings.

- Audio Deep Dream: Applies the Deep Dream principle to generate audio.

- Motion to Sound Translation: Translate motion into audio with a recurrent neural network.

Non-Standard Sound Synthesis © Instituto Stocos, 2023
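To make the idea of dynamic stochastic synthesis more concrete, the following is a simplified sketch, not the toolbox's implementation. A waveform cycle is described by a handful of breakpoints; on every cycle, each breakpoint's amplitude and duration takes a small random step, and the samples are obtained by linear interpolation between breakpoints. All names and parameter values are assumptions, and uniform random steps stand in for whatever probability functions a real model would use.

```python
import random

def clamp(value, low, high):
    """Keep a value inside the range [low, high]."""
    return max(low, min(high, value))

def stochastic_synthesis(num_cycles, num_breakpoints=8, amp_step=0.1,
                         dur_step=2.0, min_dur=2, max_dur=40, seed=0):
    """Generate samples in [-1, 1] from randomly drifting breakpoints."""
    rng = random.Random(seed)
    amps = [rng.uniform(-1.0, 1.0) for _ in range(num_breakpoints)]
    durs = [rng.uniform(min_dur, max_dur) for _ in range(num_breakpoints)]
    samples = []
    for _ in range(num_cycles):
        # Random walk: perturb every amplitude and duration, then clamp.
        amps = [clamp(a + rng.uniform(-amp_step, amp_step), -1.0, 1.0)
                for a in amps]
        durs = [clamp(d + rng.uniform(-dur_step, dur_step), min_dur, max_dur)
                for d in durs]
        # Interpolate linearly from each breakpoint to the next one,
        # wrapping from the last breakpoint back to the first.
        for i in range(num_breakpoints):
            start = amps[i]
            end = amps[(i + 1) % num_breakpoints]
            length = int(round(durs[i]))  # segment length in samples
            for n in range(length):
                samples.append(start + (end - start) * n / length)
    return samples

waveform = stochastic_synthesis(num_cycles=20)
```

In an interactive sonification, a movement feature could plausibly be mapped to `amp_step` or `dur_step`, so that more energetic movement produces a noisier, less stable timbre. That mapping is a hypothetical example rather than a description of the tools.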

Image synthesis

Three tools for image synthesis

- Raymarching: renders synthetic or recorded motion capture data of a single dancer as abstract images

- Image Autoencoder: transform existing images into novel images and animations

- Image Deep Dream: implements the Deep Dream technique to generate synthetic images

RayMarching Image synthesis © Instituto Stocos 2023
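For readers unfamiliar with raymarching, the sketch below shows the core loop (sphere tracing) in plain Python. It assumes, purely for illustration, that each joint of a pose is drawn as a sphere and that the result is a coarse text image. The joint positions, radius, camera placement and function names are all hypothetical, and a real-time renderer would normally run this logic in a GPU shader.

```python
import math

def scene_distance(point, joints, radius=0.25):
    """Signed distance from a point to the nearest joint sphere."""
    return min(math.dist(point, j) for j in joints) - radius

def march(origin, direction, joints, max_steps=64, max_dist=10.0, eps=1e-3):
    """Sphere tracing: step along the ray by the distance to the scene.

    Returns the distance travelled to the first hit, or None on a miss.
    """
    travelled = 0.0
    for _ in range(max_steps):
        point = tuple(o + travelled * d for o, d in zip(origin, direction))
        dist = scene_distance(point, joints)
        if dist < eps:
            return travelled
        travelled += dist
        if travelled > max_dist:
            break
    return None

def render(joints, width=32, height=16):
    """Render the joints as rows of '#' (hit) and '.' (miss) characters."""
    camera = (0.0, 0.0, -3.0)
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel to [-1, 1] and build a normalised ray direction.
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            norm = math.sqrt(u * u + v * v + 1)
            direction = (u / norm, v / norm, 1 / norm)
            row += "#" if march(camera, direction, joints) is not None else "."
        rows.append(row)
    return rows

# Hypothetical pose with three joints, in metres.
pose = [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0), (0.3, -0.4, 0.0)]
image = render(pose)
```

Replacing `scene_distance` with other distance functions, or blending the spheres smoothly, is what lets this technique produce abstract, non-anthropomorphic shapes from the same pose data.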

ONLINE DISCUSSION WEBINARS

Friday 4/4/25, 12:00 CET: Performance and archive: curatorship and mediation in the digital era with Ezequiel Santos and Gonçalo Amorim, featuring Hélia Marçal

Thursday 10/4/25, 14:00 CET: Hybrid dance and theater co-creation, knowledge transmission and rehearsal in XR spaces with Suzan Tunca and Depy Panga.

Friday 11/04/25, 16:00 CET, mēkhanḗ: Exploring Human-Machine relationship in the performing arts with Erik Lint and Pablo Palacio

Join us and be part of the discussion!

In practice

The AI-Toolbox is developed to support an open and modular creative methodology. We aim to promote the use of the tools by creators with diverse technical skills, discipline backgrounds and objectives.

The first step in working with the tools is obtaining movement data through motion capture. For this, the toolbox offers methods to obtain partial or full body pose information, either through commercial motion capture systems or by analysing camera images from a live video stream. The analysis of low-level kinematic data is based on the Laban Movement Analysis (LMA) system. It makes it possible to describe movement principles and aspects that relate to the dynamics, energy, and inner intention of movement, all of which contribute to its expressivity.

Motion data then serve as the basis for a series of creative applications of the tools: creating an artificial dance partner, generating synthetic sounds and images in real time for media-augmented performances, or giving dancers multimodal feedback to increase their awareness of body movements.

Interoperability and modularity

The AI-Toolbox is designed to be modular: each tool can work on its own or together with others, communicating through the OSC (Open Sound Control) protocol. This protocol is widely used for real-time sound and media control, which makes it easy to integrate the AI-Toolbox into creative coding and computer music setups. The tools can run as standalone applications built on open-source software or be integrated into larger systems that send and receive control commands.
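To show what exchanging data over OSC involves, here is a minimal sketch that builds an OSC 1.0 message containing float arguments and sends it over UDP using only the Python standard library. The address `/mocap/joint/pos`, the host and the port are hypothetical placeholders, not the addresses the tools actually use; in practice an existing OSC library would usually be preferred.

```python
import socket
import struct

def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    # OSC numbers are big-endian, hence the ">" in the struct format.
    payload = b"".join(struct.pack(">f", v) for v in values)
    return (osc_pad(address.encode("ascii"))
            + osc_pad(type_tags.encode("ascii"))
            + payload)

def send_osc(packet: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one encoded OSC packet over UDP (host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))

# Hypothetical message carrying the x, y, z position of one joint.
packet = osc_message("/mocap/joint/pos", 0.1, 1.2, -0.3)
```

Calling `send_osc(packet)` would deliver the message to any OSC-aware program listening on that port. Because the format is this simple, a motion analysis tool, a sound synthesis tool and a visualisation tool can be connected without sharing any code.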

Take it as it is or hack the code

The implementation supports users with different levels of technical expertise. Users with little technical expertise can run the tools as standalone applications, users with some technical expertise but no programming skills can retrain the models and/or modify the configurations of the tools, and users with some programming skills can modify the functionality of the open-source tools or integrate the algorithms and models into their own software.

A new performance creation will be presented at Coliseu in 2025 to showcase the tools. Meanwhile, we are conducting workshops with dancers, choreographers and creative coders to disseminate and try out the tools.

Who participates?

- Instituto Stocos

- Athena Research Center

- IDlab / Amsterdam University of the Arts