Performing XR

Exploring presence and connection in virtual environments

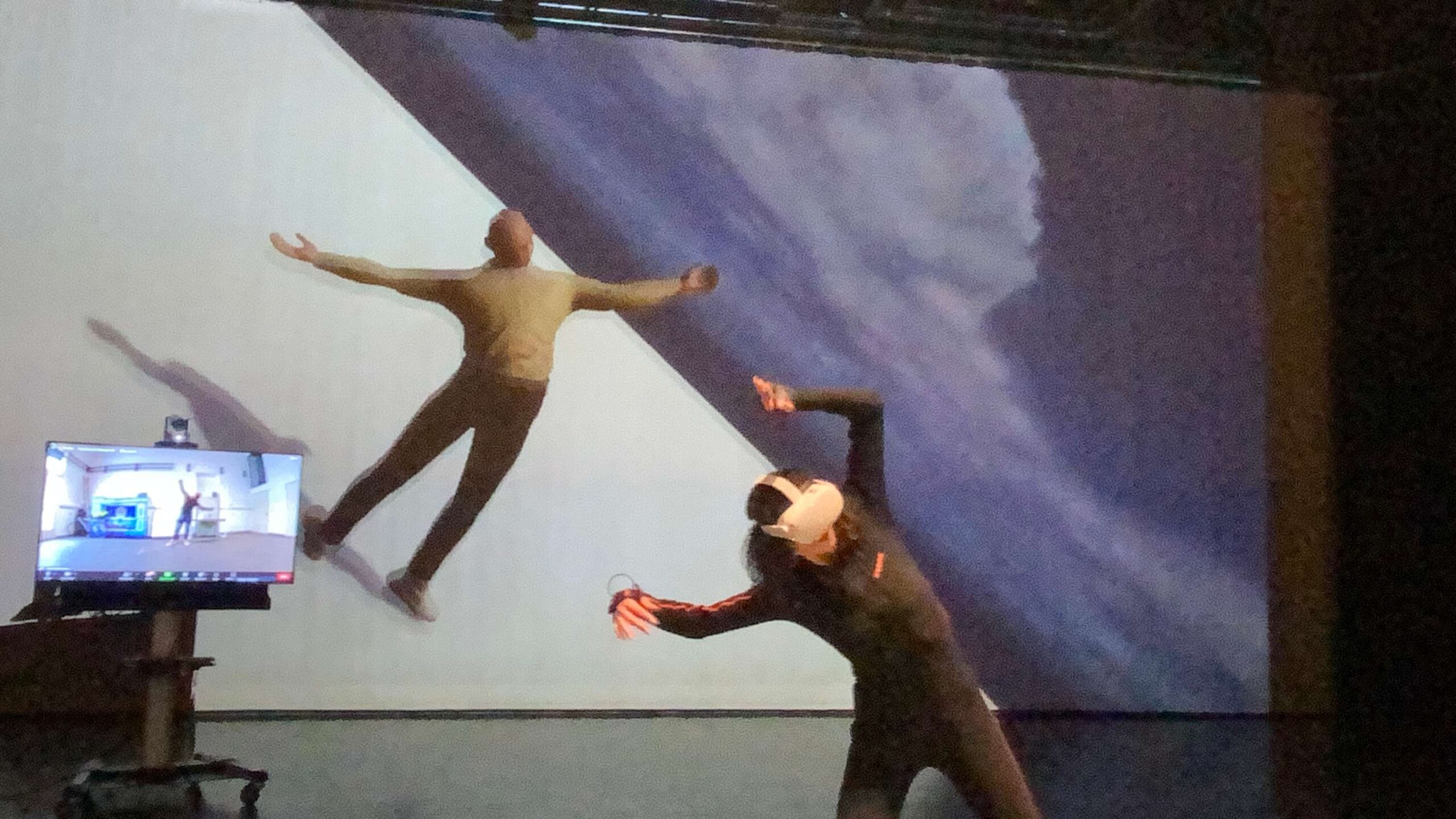

Immersive experiences built on extended reality (XR) technologies are opening up a new type of interaction between performers and audiences. Their different modalities of presence and connection raise a question: how can we use XR technologies to extend the stage and create meaningful tools for theatre and dance practice? Our research focuses on lowering attendance barriers, so that people can enjoy a work without being physically present, and on tackling the challenges related to technical skills, so that venues and creators can use these technologies in their own practice.

Our goal

Performance capturing and streaming, including interaction:

- Capturing performances: a protocol for the operationalisation of dance and theatre capture

- 3D Virtual Theatre: streaming live performances and connecting users from remote locations

Technologies

The extended reality umbrella covers a range of technologies, from motion capture to online streaming. They are used extensively in the film and entertainment industries because they make it possible to build virtual environments and interact within them. In Premiere, we aim to facilitate real-time interaction between remote performers and the virtual environment, and to implement efficient streaming capabilities that distribute the content to remote viewers in VR and in web browsers.

Performance capture protocol

A comprehensive protocol for making informed decisions about capture systems, based on the needs and limitations of a theatre or dance performance. It considers the systems that record the different properties of a performance, detecting movement, light, sound, facial expressions, eye movements and even the actors' voices.

- Motion capture: Capture human bodies using external cameras or sensors placed around the tracking area (Outside-In) or on the tracked body (Inside-Out).

- Audio modalities: spatial audio, isolated voice or studio output

- Lighting: Surrounding light (light probes), luminosity range (High Dynamic Range), reflectance fields

- Props: Track props in real time with optical markers, or track when they move in and out of a scene with RFID tags.

- Facial expression: Head mounted rig, stereo and/or depth cameras for facial movements.

- Data management: Local synchronisation, standardized transmission, storage

Motion capture with sensors © CYENS, with Jeroen Janssen and Rodrigo Ribeiro, 2023

Avatar creation

Creating virtual representations of the performers

Considering processes for representing the morphology and the behaviour of performers

- 3D modelling: Creating the morphology and appearance of the 3D models

- Rigging: Enabling realistic movement and deformation of 3D characters or objects

- Animation: pose-based models utilizing kinematic chains, or volumetric capture recording a person’s entire form and movement
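Pose-based animation with kinematic chains can be illustrated with forward kinematics: given each joint's rotation relative to its parent and the bone lengths, the positions of all joints follow by accumulating the rotations down the chain, so rotating a shoulder also moves the elbow and wrist. The sketch below is a minimal version under the assumption of a planar (2D) chain; production rigs work in 3D with matrices or quaternions, and the `Joint` class and `forward_kinematics` function are hypothetical names, not part of any PREMIERE tool.

```python
import math
from dataclasses import dataclass


@dataclass
class Joint:
    """One link in a planar kinematic chain (hypothetical, 2D for brevity)."""
    bone_length: float  # length of the bone that follows this joint
    angle: float        # rotation relative to the parent bone, in radians


def forward_kinematics(root, joints):
    """Return the 2D position of every joint, starting at the root.

    Rotations accumulate along the chain, so a parent's rotation
    carries every child joint with it.
    """
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for joint in joints:
        heading += joint.angle
        x += joint.bone_length * math.cos(heading)
        y += joint.bone_length * math.sin(heading)
        positions.append((x, y))
    return positions


if __name__ == "__main__":
    # A hypothetical arm: shoulder rotated up by 90 degrees, elbow bent back by 90 degrees.
    arm = [Joint(bone_length=0.30, angle=math.pi / 2),
           Joint(bone_length=0.25, angle=-math.pi / 2)]
    for px, py in forward_kinematics((0.0, 0.0), arm):
        print(f"({px:.2f}, {py:.2f})")
```

Animating an avatar then amounts to updating the joint angles every frame (for example from motion capture data) and recomputing the positions.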

Avatar animation © CYENS 2023

VR streaming

How do users see the VR environment, and how is it transmitted? Content delivery in XR will allow a performance to be streamed and multiple performers connected from different locations to interact.


3D scene modelling and editing

Creating a virtual environment that hosts XR performances, using 3D modelling techniques and specialized game engines


In practice

As XR technologies are not yet widely used in the performing arts, creatives in the theatre and dance cultural and creative industries (CCIs) still need expertise and resources to employ 3D modelling and VR technologies. While many solutions exist, the ways of using them are not yet standardized, which makes their adoption an experimental and often costly endeavor. We focus on proposing a process for their use and developing an environment for their deployment, to make XR technologies in the performing arts more accessible to venues and creators.

Performance capture and mocap systems

The first aspect of our work concerns performance capture and the creation of a protocol for using the different solutions. We have been surveying and testing several solutions that allow us to capture (in more traditional terminology, record) the different assets of a work: the performers' motion, speech and facial expressions, the props and lights of the stage, and the music or sound that is played.

The challenge lies in making the right choice for the specific needs of a work and its production, as well as for the distinct requirements of dance and theatre capture. The final choice should be informed by fundamental considerations such as the available financial resources, the intrusiveness of a capture system (which may restrict the performers' freedom of movement), the scale of the work and the number of performers, and the need for facial fidelity.
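These considerations can be read as hard constraints that narrow down the candidate systems. A minimal sketch, assuming each system's cost, intrusiveness, capacity and facial capability have already been assessed by the production team; the class names, fields, the numeric intrusiveness scale and the sample values in the usage block are all hypothetical and do not describe real products.

```python
from dataclasses import dataclass


@dataclass
class CaptureSystem:
    """A candidate capture system; every value is assessed by the production team."""
    name: str
    cost: float           # total cost, in the production's budget currency
    intrusiveness: int    # hypothetical scale: 0 = nothing worn, higher = more equipment
    max_performers: int   # how many performers it can track at once
    facial_capture: bool  # whether it also records facial expressions


@dataclass
class ProductionNeeds:
    """Constraints of one specific theatre or dance production."""
    budget: float
    max_intrusiveness: int
    performers: int
    needs_facial: bool


def shortlist(systems, needs):
    """Keep the systems that satisfy every constraint, cheapest first."""
    suitable = [
        s for s in systems
        if s.cost <= needs.budget
        and s.intrusiveness <= needs.max_intrusiveness
        and s.max_performers >= needs.performers
        and (s.facial_capture or not needs.needs_facial)
    ]
    return sorted(suitable, key=lambda s: s.cost)


if __name__ == "__main__":
    # Hypothetical candidates and values, for illustration only.
    candidates = [
        CaptureSystem("suit_a", cost=3000, intrusiveness=2, max_performers=1, facial_capture=False),
        CaptureSystem("cameras_b", cost=8000, intrusiveness=0, max_performers=4, facial_capture=False),
        CaptureSystem("rig_c", cost=12000, intrusiveness=3, max_performers=2, facial_capture=True),
    ]
    dance_piece = ProductionNeeds(budget=10000, max_intrusiveness=1, performers=3, needs_facial=False)
    print([s.name for s in shortlist(candidates, dance_piece)])  # ['cameras_b']
```

Hard filters are only a first pass: when several systems remain, trade-offs such as facial fidelity against cost still call for the kind of informed judgement the protocol aims to support.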

As for motion capture specifically, the CYENS team conducted tests with commercial motion capture suits (Xsens, Rokoko) together with dancers and choreographers from ICK, while the UJM team focused on markerless motion capture techniques using external cameras, and on creating a live Data Motion Dataset that introduces variations in occlusion, lighting and facial expressions.

Overall, performance capture in PREMIERE involves a) collecting data on the performers and their environment from the different physical locations, b) synchronizing these data locally, c) transmitting them in standardized formats over a network to the virtual theatre's state synchronization server, which d) ensures that all performers experience the same state, and e) storing the appropriate data in the CMS.
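The five steps can be illustrated end to end in a few lines. This is a minimal, single-process sketch, assuming timestamped samples on a clock already shared by the devices at one location, JSON as the standardized format, a fixed frame rate for local synchronization, and a plain list as a stand-in for the CMS. `Sample`, `synchronize`, `encode`, `StateServer` and the location name `venue_a` are hypothetical; a real deployment would send the messages over a network rather than call the server directly.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Sample:
    """a) One measurement from one capture device (hypothetical format)."""
    source: str       # e.g. "mocap_suit" or "stage_lights"
    timestamp: float  # seconds on the clock shared by the local devices
    values: dict


def synchronize(samples, frame_rate=30.0):
    """b) Group samples from different devices into frames on a common clock.

    Each sample goes to the nearest frame; if one device reports twice
    within the same frame, its later sample wins.
    """
    frames = {}
    for s in sorted(samples, key=lambda s: s.timestamp):
        index = round(s.timestamp * frame_rate)
        frames.setdefault(index, {})[s.source] = s.values
    return frames


def encode(location, frame_index, frame):
    """c) Serialise one frame into a standard format (JSON here) for transmission."""
    return json.dumps({"location": location, "frame": frame_index, "data": frame})


@dataclass
class StateServer:
    """d) Holds one shared state so every connected performer sees the same scene."""
    state: dict = field(default_factory=dict)
    archive: list = field(default_factory=list)  # e) stand-in for the CMS

    def apply(self, message):
        update = json.loads(message)
        current = self.state.get(update["location"])
        # Each location owns its own slice of the state; stale frames are ignored.
        if current is None or update["frame"] > current["frame"]:
            self.state[update["location"]] = update
        self.archive.append(update)  # e) keep every update for later storage
        return self.state


if __name__ == "__main__":
    # a) Hypothetical samples collected at one venue.
    samples = [
        Sample("mocap_suit", 0.000, {"hip": [0.0, 1.0, 0.0]}),
        Sample("stage_lights", 0.010, {"intensity": 0.8}),
        Sample("mocap_suit", 0.034, {"hip": [0.1, 1.0, 0.0]}),
    ]
    server = StateServer()
    for index, frame in synchronize(samples).items():
        server.apply(encode("venue_a", index, frame))
    print(server.state["venue_a"]["frame"])
```

Ignoring frames older than the one already held is one simple way to keep every client on the same, most recent state when messages arrive out of order.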

VR streaming

The 3D virtual theatre is the venue that will host all of Premiere's applications, including the VR media streaming platform, a streaming channel for transmitting live VR events. In other words, it provides a stage for streaming a performance to an audience connected from remote locations, and a place where performers can meet.

Our approach includes developing a networking architecture that facilitates real-time interaction between remote performers and the virtual environment, implementing efficient streaming capabilities to distribute the content to remote viewers in VR and in web browsers, and integrating human avatar animation and 3D scene modelling. Moreover, we are tailoring user experiences to users' roles: audience members will have different privileges and access rights from performers. The challenges include synchronising users, synchronising different data types, and finding the balance between image quality, latency and system resiliency.
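Role-dependent privileges can be expressed as a table that maps each role to the actions it may perform, which the server checks before accepting a message from a user. A minimal sketch: the two roles come from the text, but the specific actions and the rights assigned to each role are hypothetical examples, not PREMIERE's actual access policy.

```python
from enum import Enum, auto


class Role(Enum):
    PERFORMER = auto()
    AUDIENCE = auto()


class Action(Enum):
    VIEW_STREAM = auto()
    SEND_POSE = auto()       # drive an avatar on the virtual stage
    SPEAK_ON_STAGE = auto()
    SEND_REACTION = auto()   # e.g. applause from a remote viewer


# Hypothetical rights table: which role may perform which action.
PERMISSIONS = {
    Role.PERFORMER: {Action.VIEW_STREAM, Action.SEND_POSE, Action.SPEAK_ON_STAGE},
    Role.AUDIENCE: {Action.VIEW_STREAM, Action.SEND_REACTION},
}


def is_allowed(role, action):
    """Return True if a user with this role may perform the action."""
    return action in PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed(Role.AUDIENCE, Action.SEND_POSE))   # False
    print(is_allowed(Role.PERFORMER, Action.SEND_POSE))  # True
```

Keeping the rights in one table rather than scattered checks makes it straightforward to add roles later (for example, a technician or director) without touching the message-handling code.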

Institutions and creators will find in Premiere both a guide for adopting and combining systems for capturing a theatre or dance performance, and an infrastructure that supports interaction between performers and VR streaming for the audience. This is how we wish to empower organisations to host virtual environments as an extended set for creation and distribution, and to provide a stage where artists can create and audiences can enjoy their work.

Who participates?

- CYENS Centre of Excellence

- Athena Research Center

- Medidata.net – Sistemas de Informação Para Autarquias

- ICK Dans Amsterdam

- Coliseu Porto Ageas

- Argo Theater